Abstract

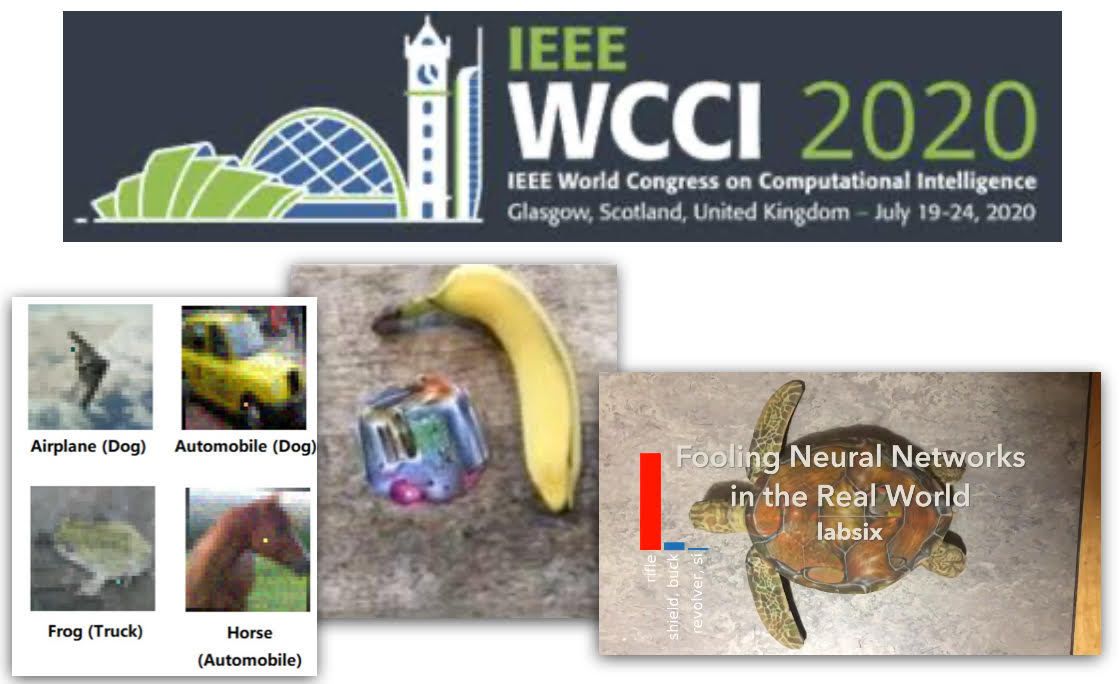

Recent research has found that Deep Neural Networks (DNNs) behave strangely in response to slight changes in the input. This tutorial covers this curious and still poorly understood behavior, and examines what it means and how it relates to our understanding of DNNs.

In this tutorial, I will explain the basic concepts underlying adversarial machine learning and briefly review the state of the art with many illustrations and examples. In the latter part of the tutorial, I will demonstrate how attacks are helping us understand the behavior of DNNs and show that many proposed defenses do not improve robustness. Many challenges and puzzles remain unsolved; I will present some of them and outline a couple of paths toward a solution. Lastly, the tutorial will close with an open discussion and the promotion of cross-community collaboration.

Tutorial Presenter

Danilo Vasconcellos Vargas is currently an Associate Professor at Kyushu University, Japan. His research interests span Artificial Intelligence (AI), evolutionary computation, complex adaptive systems, interdisciplinary studies that involve or use an AI perspective, and AI applications. Many of his works have been published in prestigious journals such as Evolutionary Computation (MIT Press), IEEE Transactions on Evolutionary Computation, and IEEE Transactions on Neural Networks and Learning Systems, with press coverage in news outlets such as BBC News. He has received awards such as the IEEE Excellent Student Award, as well as scholarships to study in Germany and Japan for many years. As for community activities, he has presented two tutorials at the renowned GECCO conference.

Regarding adversarial machine learning, he has given more than 5 invited talks on the subject, including one at a workshop at CVPR 2019. He has authored more than 10 articles and three book chapters on adversarial machine learning, and one of his research outputs was covered by BBC News (the paper “One pixel attack for fooling deep neural networks”).

Currently, he leads the Laboratory of Intelligent Systems, which aims to build a new age of robust and adaptive artificial intelligence. More information can be found on his Homepage and Lab Page.

Outline

- What is Adversarial Machine Learning (AML)?
- Definition and Basic Concepts
- What are Adversarial Samples?
- Attack Types
- Black-box and White-box Attacks
- Examples of AML
- Attacks on Images
- Attacks on Texts
- Attacks on Speech Recognition
- Real World Attacks
- Python Example!
- Understanding Deep Neural Networks (DNN)
- The Deep Meaning of Adversarial Samples
- Attacks as Tools for Understanding DNNs
- The (Not So Bright) Present
- Current Defenses
- Obfuscated Gradients
- The Future Ahead
- Pieces of the Puzzle
- Open Paths
- Discussion and Cooperation Opportunities
